Learning to See Cancer in Early Detection Research

Abstract

This article explores how scientists learn to detect ‘pre-cancer’, a new diagnostic category defined by the risk of developing the titular disease. This process entails the observation of ‘raw signals’ that stand for potential molecular and metabolic changes in animal and human tissues and their validation as ‘candidate biomarkers’. I draw on ethnographic fieldwork conducted alongside a multidisciplinary group of researchers—physicists, biologists, mathematicians, computer scientists, and engineers, among others—all of whom worked as part of a research programme investigating the early signs and detection of cancer in the UK. ‘Signals’ detected through scientific experiments are intimately entangled with the sensing technologies and analytical techniques used. As previously unknown microscopic realities emerge, scientists seek to negotiate the uncertainty surrounding the identification and validation of signals as candidate biomarkers before they can be tested in clinical trials.

Introduction

The study of pre-cancerous lesions and their evolution is gaining increased attention and investment in the United Kingdom (UK). Scientists and health professionals see the potential for accurate diagnoses and less invasive treatments if they can gain a deeper understanding of the genetic makeup of our bodies and other intracellular metabolic features (Tutton 2014). As part of a movement towards what has been dubbed ‘precision medicine’, the molecularisation of clinical research is currently taking place through the development of a microscopic gaze. This gaze ‘visualises life at the molecular level in terms of genes, molecules and proteins’ (Bell 2013, 126) and understands it as a set of ‘intelligible mechanisms that can be identified, isolated, manipulated, mobilised, and recombined in new practices of intervention’ (Rose 2007, 6).

The understanding of illness and health through the lens of molecular interactions sustains the idea that we might collectively prevent suffering and reduce healthcare costs if we can catch progressive and degenerative clinical conditions in people’s bodies before any symptoms appear. This molecular gaze not only affirms an extended temporality of medical conditions but also adds a multidimensional consideration: understanding health and illness at the molecular level leads us to perceive that ‘dangers come from everywhere’ and that ‘everyone is potentially ill and no one is truly healthy as everyone has a particular risk factor profile that can be managed by a vigilant medicine’ (Armstrong 2012, 411).

Richard Milne and Joanna Latimer argue that, in the case of dementia, ‘[t]he political-scientific-clinical approach to Alzheimer’s disease co-produces dementia as a future that should be considered, engaged with and acted upon in the present by individuals and societies’ (Milne and Latimer 2020, 2). In this context, identifying and measuring disease susceptibilities produces a shift that pre-symptomatic patients might experience as a ‘measured vulnerability’ mobilised in terms of numbers or blood counts, which galvanise patients’ further engagement with diagnostic tests and thus stabilise uncertain health statuses (Gillespie 2012). Early detection practices therefore advance an understanding of susceptibility to disease that weighs the disruptive potential of the disease in question against the possibility of alleviating the suffering of those affected.

In this context, I frame early detection as a ‘boundary object’ (Löwy 1992), a roughly defined epistemic space within which professionals from multiple disciplines come to generate various kinds of knowledge. I delve into the knowledge-making practices through which scientists seek to develop a fine-tuned understanding of the natural histories of cancer and so dispel uncertainties about where, when, and how certain cancer susceptibilities emerge.

In mining this loosely defined space of uncertainty surrounding the markers of risk, early detection scientists drive the development of diagnostic categories. By transforming the markers conventionally used to define the onset of progressive medical conditions, which are framed in terms of symptoms (Armstrong 2012), scientists inform what constitutes ‘cancer risk’, bringing about pre-symptomatic realities to be managed in the clinic (Aronowitz 2009). The medicalisation of those pre-symptomatic realities in turn informs the meanings that one attaches to particular illnesses—for example, it defines whether a given illness is ‘devastating’ or not (Milne and Latimer 2020)—and impacts upon the ways in which people make sense of their bodies and their futures (Bell 2013; Gibbon 2007). As Samimian-Darash and Rabinow have argued, technologies of knowing used to manage uncertainty enable us to see ‘what truth claims are advanced about the future, what interventions are considered appropriate, and what modes of subjectivity are produced’ (2015, 4).

Stepping back from the social realities that emerge when risk stratification practices are communicated and acted upon in the clinic, I am interested in the ways in which pre-clinical scientists engage with experimental systems so as to learn to see pre-cancerous lesions in the first place. I draw on 18 months of fieldwork conducted within a well-funded research programme that developed in a British university over the last decade. Bringing together researchers from various disciplines—ranging from molecular biology to chemistry, physics, medicine, engineering, computer science, and others—as well as a network of laboratories, translation clinics, and other public and private research centres in the region, the programme aims to discern the contours of pre-cancer and cancer risk as emerging epistemic categories.

To model processes that characterise tumour evolution in laboratories, the early detection scientists I met seek to identify molecular and metabolic ‘signals’ involved in tumour initiation and growth. Such scientists articulate a kind of reasoning that uses ‘experimental systems’ to ask new questions (Rheinberger 1997) and figure out how their answers—the signals they see—‘translate’ across domains (e.g., from mathematical models to petri dishes, animal models, and human beings [Svendsen and Koch 2013; Friese 2013]). If robust, those ‘signals’—outputs detected by sensing technologies—can then be validated as ‘biomarkers’ that can potentially be used to measure and target evolving health conditions in the clinic.

Understood as quantifiable analytes or substances in patients’ bodies, biomarkers are increasingly incorporated into decision-making algorithms to enable scientists and health professionals to predict tumour evolution, stratify those who might benefit most from specific treatment options, and distinguish those who might benefit from surveillance but not treatment (Blanchard and Strand 2017). As I have shown in the case of PD-L1, a molecular biomarker deployed as a diagnostic test to determine a patient’s eligibility for the immunotherapy pembrolizumab (Arteaga 2021), molecular biomarkers are endowed by scientific communities with prognostic as well as predictive capacities.

By contributing to the anthropological analysis of those scientific practices that are articulated in early detection spaces to inform novel diagnostic categories of pre-cancer and cancer risk, I seek to shed light on what is at stake in so-called processes of the ‘discovery’ and ‘validation’ of those biomarkers that help scientists decipher the natural histories of cancer.

Between 2019 and 2021, I immersed myself in the academic and research spaces that early detection scientists frequented, both face to face and virtually while the COVID-19 pandemic unfolded in the UK. In the first year, I enrolled in a lecture and seminar series on cancer biology and early cancer detection to familiarise myself with the approaches used across the research programme. A few months later, I joined research meetings and journal clubs in laboratories and office rooms. I also attended dozens of events on the topic of early cancer detection to delve more deeply into scientists’ specific projects, coming to grips with how they communicate their research to each other in purposely multidisciplinary initiatives.

Twenty-five scientists from six different groups agreed to discuss their research projects with me. On numerous occasions, I watched parts of the experiments they were working on in the labs. At other times, scientists preferred to discuss with me the processes by which they arrived at their results. I also analysed the pseudonymised fieldnotes I wrote throughout my multidisciplinary fieldwork, looking for a way to articulate the most common challenges that scientists faced in their efforts to see cancer before it exists.

As others before me have done (e.g., Myers 2015; Nelson 2012; Ong 2016), I pay attention to the practices through which scientists see and make molecularisation workable in laboratories. I mobilise the idea that learning to see cancer before it exists is a process in which the properties of scientists and epistemic objects are mutually defined (Latour 2004). I show that, to be able to come up with a potentially useful biomarker, scientists must accomplish three different tasks. These I analyse in turn.

First, scientists learn to be affected by microscopic changes of interest in order to ‘find a signal’—that is, to know what they are looking for. Second, they learn to manage the uncertainty that muddles their endeavours when the signals produced by experimental systems are as likely to be real as they are artefacts of the experiment itself. Third, scientists forge a sense of responsibility to critically consider how their research findings are going to be deployed in the clinic and to what end.

However, before I illustrate the details of these practices, some conceptual distinctions must be explained. In the next section, I offer a brief overview of scholarship pertaining to the construction of cancer risk, the production of scientific objectivity, and the articulation of experimental systems and research models.

Technologies of knowing

Scientists only began to observe cancer risk through a microscopic lens in recent years. With the increasing personalisation of diagnostic and treatment approaches in medicine (Day et al. 2017) and in line with the continuous efforts to harness the potential of genomics for the surveillance of health as a mode of ‘anticipatory care’ (Tutton 2014, 159), cancer risk has been increasingly localised inside rather than outside patients’ bodies.

As ‘technologies of knowing’, biomarkers simultaneously reveal and consolidate the potential significance of specific agents of misfortune (Whyte et al. 2018, 105), becoming instrumental in the creation of more ‘personalised’ risk-based categories utilised in the prediction and prognosis of many types of cancer. When these markers have referred to pharmacologically ‘actionable’ targets, they have led to better tailored treatments (Nelson, Keating, and Cambrosio 2013). Clinically useful biomarkers distinguish subgroups of patients and determine, at least statistically, what the future holds for those tested through novel blood assays and imaging techniques.

The emergence of biomarkers in cancer biomedicine reinforces the role of evidence in clinical spaces (Löwy 2010), thus expanding the realm of what a disease can be considered to be (Aronowitz 2009). As such, the introduction of biomarkers into the clinic has facilitated changes in the temporal and relational qualities of emerging pre-disease conditions (Gibbon 2007), coupling detection practices with new medicalisation approaches, including surveillance regimes that use both imaging technologies and blood counts (Bell 2013) and the prescription of pharmaceutical products (Dumit 2012).

However, having developed some technologies to ascertain ‘risk’ at the molecular level, scientists and clinicians are aware of the difficulties of reading and interpreting ever more specific genetic results that might or might not cause trouble in the future (Kerr et al. 2019). To the predictive challenge of not knowing if/when misfortunes could eventually occur (since many genetic mutations remain indolent throughout a person’s lifespan), a further layer of complexity is added: that of intelligibility (Reardon 2017). Through the production of biomarkers, molecular data need to add value not only to clinical domains, but also to market circuits, which in turn makes research collaborations attractive to the private pharmaceutical industry. As Aihwa Ong put it in her work among scientists developing a drug to action a novel ‘ethnic’ biomarker in lung cancer in Singapore, data must become ‘fungible’ (2016, 13).

Scholarship on cancer risk and biomarkers complements work in the history of science that has identified the situated conditions required to produce ‘objectivity’ (Shapin and Schaffer 2011)—that is, the process through which epistemic objects are defined (Rheinberger 1997)—and the ways in which those conditions challenge ideas of representationalism in the sciences (Bachelard 1953). Such scholarship offers insights into the relevance of the ‘laboratory’ as a site in which observation becomes an active intervention afforded by epistemic practices to produce phenomena (Hacking 1983, 189) and observed phenomena are transformed into workable objects (Myers 2015).

These scholars show the significance of ‘inscription devices’, which transform pieces of matter into written documents (Latour and Woolgar 1979, 51); performative practices, through which epistemic objects come to matter (Barad 2007); and the ways in which ‘facts’ are translated and taken to be objective representations of reality by multiple audiences (Dumit 2004).

Now, when phenomena sit at the edge of perception, only models are available to determine the accuracy with which technologies detect biological properties. In this article, I draw on scholarship that examines ‘experimental systems’ as arrangements that allow us to create cognitive spatiotemporal singularities; that is, those unprecedented events that produce novel knowledge forms (Rheinberger 1997, 23–27). Here, I show that new information produced through the purification of ‘raw signals’ detected in experimental systems is used to construct a biological referent that cannot be observed by the naked eye.

By paying attention to the construction and justification of experimental systems, we can learn how scientists develop analogical and translational thinking to extrapolate results across different domains (Nelson 2012). To do so, I build on Antonia Walford’s argument and show that scientists must grapple with the uncertain boundary between what is a signal and what is noise by paying attention to specific relational demands posed by ‘raw data’ (Walford 2017).

The use of research models in science foregrounds a distinction between what is ‘real’ and what is ‘representative’ that underlies the relations of substitution that make translation—that is, the anticipation of future effects on other domains—possible. In relation to the use of piglets in translational neonatal care research, Svendsen and Koch argue: ‘What characterizes this moral economy is that the particulars of species-specific life forms are performed as universals’ (2013, S118).

Focusing on the construction of claims afforded by the use of mice models in studies of human behavioural disorders in the United States (US), Nicole Nelson (2012) gives an account of the practices through which behavioural animal geneticists adjust the riskiness of claims about mental health that result from their engagements with animal models. Similarly, I draw on the awareness that scientists reported when discussing which findings could be translated into other domains, and how. I also explore how group leaders instil a sense of responsibility among scientists still in training. In the context of the discovery and validation of candidate biomarkers for the early detection of cancer, I show how researchers reflect on the manner in which potential biomarkers could be implemented in the clinic and to what effect.

Following the bodies of literature outlined above, I collapse the distinction between biological and technical aspects of scientific practice so as to better situate the ways in which scientific facts are produced through ongoing negotiations between people, instruments, and substances (Dumit 2004). Specifically, I explore how scientists engage with the signals emerging from experimental models to shed light on how they learn to see molecular and metabolic changes of relevance for the early detection of cancer.

Building experimental systems to see cancer before it exists

In an attempt to make sense of a vast and unfamiliar body of literature, I asked every one of the twenty-five scientists I interviewed to send me two papers in advance so that I could situate their work. From these encounters with people and their texts, I learnt that, to carry out research projects, scientists choose from a plethora of ‘experimental systems’ to model features of interest, pose questions, and obtain some answers that lead only to further questions (Rheinberger 1997). The experimental systems used by the scientists I met included computerised mathematical simulations (‘in silico models’) for those working in ‘dry labs’ and, for those working in ‘wet labs’, cell lines cultivated in petri dishes (‘in vitro models’), tissue harvested from humans and grown in external environments (‘ex vivo models’), and ‘animal models’ (mostly genetically modified mice).

Most of the research students I met would use experimental systems that had already been developed in other laboratories. They felt that, in the space of three to four years—the typical length of their postgraduate degrees—the risk of failing to develop something completely new was too high. This risk was approached differently by researchers who, having accrued tenure and experience, were involved in ‘blue skies research’. This was true of Sonia (pseudonym), a senior postdoctoral researcher with a background in stem-cell biology, who came to the research unit to ‘develop different systems’.

Sonia was surprised to find an anthropologist in the laboratories and was eager to talk to me. Because of safety regulations in her lab, she told me she could initially only give me an interview. She narrated how she had, over the past ten years, published significant work on the molecular understanding of breast cancer evolution using cell lines and immunocompromised mice while working in labs at three different universities. When the opportunity of a prestigious fellowship presented itself, she moved to this programme to work on the development of breast cancer ‘organoids’, her chosen experimental system.

She defined organoids for me as ‘small-scale and three-dimensional in vitro cultures cultivated from human tissue’, and explained that they had been gaining considerable attention among wet-lab scientists due to their ‘self-organising properties, enabling the study of interactions between different types of cells within the disease model’ they attempted to recreate. However, Sonia admitted that she was spending a lot of time figuring out ‘how to grow anything’, a remark that other scientists working with organoids echoed during my fieldwork. This may further explain why she could not show me any experiments.

And then the narrative construction of an ‘epistemic scaffolding’ (Nelson 2012) unfolded. Perhaps because she was struggling to ‘grow anything’, Sonia wanted to convince me of the validity and usefulness of her new experimental system. She explained to me that even when able to grow something, the challenge for this breast cancer-mimicking model is to keep the cellular structure in the desired ‘doughnut shape’. During my interview, she picked up a piece of paper and drew a doughnut with a ring of circles lining its inner edge, which, she said, represented a layer of luminal cells, a type of cell found in the mammary gland. It was there, she said, that breast cancer develops most of the time. Ideally, Sonia wanted the organoid to be able to ‘recapitulate’ the variations in breast density/stiffness observed in human breast tissues, a critical property limiting the sensitivity of current imaging techniques used for cancer diagnosis in humans. Sonia argued that if these organoid models worked for breasts that are dense or stiff, then scientists would be ‘one step ahead’ in terms of understanding what happens in more deep-seated tumours, such as those of the liver or pancreas.

Sonia wanted to better understand the natural history of breast cancer by developing an experimental system—an organoid—that would allow her to see how different cells interacted in the evolution of tumour growth in dense breast tissues. To accomplish this work, Sonia spent her time in the lab running a series of experiments to determine the best growth medium recipe. ‘Growth medium’ is a liquid or gel that provides nutrients for cells grown in vitro. As Sonia explained, this medium is ‘very complicated to make if you want the cells to behave differently than normal’—that is, to behave like cancerous lesions. Here, the ‘epistemic scaffolding’ through which Sonia justified the link between organoids and breast cancer lesions in humans was ideal rather than material; it was a demonstration of intent, or a funding pitch, rather than an outcome already achieved.

Sonia’s attempt to grow organoids able to recapitulate the ‘doughnut shape’ and ‘stiffness’ of breast tissues in humans shows that the ‘discovery’ of signals used to understand cancer evolution might be brought about by the technology itself—that is, not ‘discovered’ at all. Without an experimental system that works in the desired way, there are no cellular interactions to observe. The process of bringing about phenomena through an array of techniques is what the French philosopher of science Gaston Bachelard (1953) called ‘phenomenotechnique’.

Building on this idea, Latour and Woolgar posited that ‘it is not simply that phenomena depend on certain material instrumentation; rather, the phenomena are thoroughly constituted by the material setting of the laboratory’ (1979, 64). This argument has been echoed by Natasha Myers, who in her work with protein crystallographers in the United States described how scientists ‘make models to render the molecular world visible, tangible, and workable’ (2015, 18). Rather than ‘discovering’, what is at stake here is ‘rendering’; that is, the materialisation of an idea through human intervention.

The selection of the features that make up the model in the experimental system, such as the organoid’s desired ‘doughnut shape’ and ‘density’, requires careful consideration. This leads me to argue that, in order to understand what is going on across early detection research practices, we need to look closely at the process through which experimental systems render some biological attributes available for observation. Scientists must ‘tease apart the signals that are tumour specific’, as a group leader once put it, an exercise of attribution that adds meaning to the observed link between signals detected in experimental systems and biological features of cancer in humans.

In the next section, I explore some of the methods used by scientists to find meaning in the outputs of experimental systems; that is, how they establish the link between experimental systems and biological referents.

Finding meaning and producing ground truths

On my first day of fieldwork, Lila, the programme’s manager, came to welcome me in the little office I was allocated near the scientists’ laboratories. While talking about the options available to me in terms of accessing clinical studies for my next phase of fieldwork, I enquired about one pilot study that had recently gained some media attention. I asked her what she thought about it and she told me cryptically, ‘The [industry partner] does have a device, but they don’t have a signal; they don’t know what they are looking for.’ She quickly left the room to sort out the advertisement for a forthcoming funding deadline, and I was left to ponder the following questions: what do scientists understand as ‘signals’ of cancer before it even exists? How do they learn to see those signals?

Lila’s statement shows that experimental systems have a double edge in the production of candidate cancer biomarkers. What happens if/when meaning cannot be extracted from the system? Learning to see signals in experimental systems—that is, to identify molecular or metabolic features that define cancer risk and pre-cancerous lesions—unavoidably requires scientists to look at processes that are not directly accessible to human perception and can be approached only through experimental systems, such as Sonia’s organoids. As Karen Barad (2007, 53), building on Hacking (1983), explained, ‘To see, one must actively intervene.’

Mobilising examples from discussions around the use of imaging techniques and genomic sequencing in the labs, I show that the process of learning to see cancer in experimental systems can be grasped by attending to the ways that scientists attribute the observations inscribed by experimental systems (the ‘signals’) to molecular referents.

Yet, as is also the case when imaging the brain using PET technologies (Dumit 2004), the very technology used to detect a signal (the ‘output of interest’) is what renders it visible. This is because there is no ‘ground truth’ or alternative proof to rely on. How can scientists then argue that there is a link between a signal detected by a technology and a biological process of interest? Rose, a group leader in the research programme, patiently offered me an answer to that question.

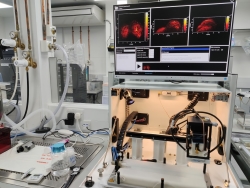

Rose leads a group working on the development of imaging technologies to shed light on the metabolic features of tumour evolution, such as tumour oxygenation. She explained to me that the scientists in her lab worked first to ascertain what they call ‘the underlying biology’ of the referential domain of interest and then to optimise the signal so that it can be observed in experimental systems over time and space regardless of the person operating the device. In her lab, scientists understand these two steps as ‘biological’ and ‘technical’ validation.

Working with photoacoustic techniques,[1] Rose argues that the key step for an imaging signal to become a metabolic biomarker of cancer is ‘biological validation’. By correlating what happens to the detected signal as a binary output of an imaging device against what happens in relation to the ‘biological process’ (the differential optical absorption of oxygen in blood captured by the sensing technology), her group aims to gain a closer understanding of the role of oxygenation in cancer.

They do this by manipulating the biological mechanisms that scientists believe produce the results they see in mice. For example, by controlling the concentration of oxygen that a mouse receives in an airtight chamber while imaging occurs, members of her lab are able to observe what happens to the ‘raw signal’ standing for haemoglobin levels. In the case of the photoacoustic scanner, the signal is basically a sound wave that is visually represented by the device when cellular oxygenation is manipulated by the scientist. ‘If the [detected] signal on the photoacoustic scanner moves along the lines predicted by the biological hypothesis, then we can move on and develop the technical validation,’[2] Rose explained to me.

Here, we see that it is the relationships between instruments, animal models, and scientists that enable Rose to account for the relationship between photoacoustic markers and metabolic responses in tumour oxygenation (Shapin and Schaffer 2011).

Besides photoacoustic imaging, genomic analysis is another way of ascertaining the biological validation of signals. This involves ‘knocking in’ and ‘knocking out’ genes in mouse models. As in photoacoustic imaging, genomic researchers seek to manipulate candidate molecular markers in controlled settings.

The kind of experimental reasoning driving these methods was showcased at one of the bi-monthly networking events that the research programme coordinates. Michael, a young-looking group leader in an associated institute, discussed some of the research carried out by his lab on the immune ‘regulatory mechanisms’ associated with the evolution of lung cancer in mice. Explaining the difference between ‘innate’ and ‘adaptive’ responses of cellular immune environments when immune cells are confronted by lung infections, Michael recounted a seemingly endless series of experiments in which scientists exposed mice to allergens to trigger an inflammatory reaction in their lungs. Each of Michael’s PowerPoint slides recounted an experiment and was titled with the hypothesis orienting the experiment, which described the relationship between the molecular features to be tested (the biological link). This link was represented as a visual diagram with one-directional arrows and variables on either side.

Following an aesthetic of careful colour coordination, each experiment was presented in three steps on the slide. First, a cute cartoon of a mouse next to a syringe specified whether any molecular pathway had been knocked in or out in the mice. Next, a rustic timeline showed the time scale of the intervention protocol, marking the point at which an allergen was injected and the period that had elapsed between exposure and observation (which required the culling of the mice). Finally, a set of histograms (graphs showing the concentration of the substance under consideration) differentiated between the control and treatment groups of mice.

A sentence summarising the results of each experimental design appeared in bold letters at the bottom of each slide. Strikingly, there was no experiment showing the falsification of a hypothesis. This meant that the audience did not know which tested molecular relationships had proven to be unsuccessful. Michael’s presentation was a winning narrative—a practice that, researchers commented, is also pervasive in, and sometimes incentivised by, peer-reviewed journals.

Michael’s presentation accomplished several functions. First, it completely erased the mice as living organisms and mobilised them purely as components of homological relationships between animals and humans (Svendsen and Koch 2013). In other words, mice were sacrificed for the sake of usable knowledge to benefit human populations.[3] They were only visible in the shape of cute cartoons and transformed into data displayed in histograms (Rader 2004).

Second, the presentation connected molecular pathways relevant to the understanding of initial immune responses to cancer (a biological process) with the signals detected under the microscope. The links between molecules and signals were conflated in histograms presenting ‘clean’ results. The graphs demonstrated a mechanistic understanding of cancer evolution; the signals were suggested to travel through logical and recognisable molecular pathways that were amenable to observation (Myers 2015). Michael’s presentation also included experiments to control the many possible molecular mechanisms affecting his results to ensure robustness. As Rheinberger put it: ‘Every experimental scientist knows just how little a single experiment can prove or convince. To establish proof, an entire system of experiments and controls is needed’ (1997, 27).

Michael set out to persuade his audience that the relationships highlighted in the presentation rendered visible the underlying genomic causality between lung inflammatory lesions in mice and immune responses leading to cancer growth, but we did not learn about those associations that proved to be unsuccessful. In other words, by presenting the results of his experiments through a carefully curated aesthetic, Michael sought to establish and demonstrate a ‘ground truth’ that could not be seen without the interventions executed by the scientists who engaged with the experimental system.

Yet the production of this ‘ground truth’ concealed the fact-making process. Not only was the living reality of the mice erased; so too were the infrastructural collaborations that are frequently disregarded, even though, like mice, they constitute an essential part of the practice of scientific fact-making. Without those looking after the wellbeing of the mice, the teams carrying out the bioinformatics analysis of genomic results, and those running the histological analyses of the lung tissues, Michael could not have established any connections between signals and molecules. In light of this, a detailed ‘acknowledgements’ slide at the end of the PowerPoint presentation acquired new meaning.

As Latour and Woolgar have argued, downplaying the techniques involved in the production of scientific knowledge (and, I would add, the people) makes ‘inscriptions generated by experimental systems look like direct indicators of the substance under study’ (1979, 63). This downplaying enables Michael, and also Rose, to speak of ‘ascertaining the biological referent of interest’ as if the biological referent were already there. Despite the resources available in the research programme, significant barriers complicate the process of associating detected signals with the biological mechanisms that could explain the natural histories of cancer. This is due, in part, to what scientists understand as ‘detection limits’, which compel them to distinguish ‘data’ from ‘noise’ when interpreting raw data produced by experimental systems. I turn to this now.

‘Detection limits’: Understanding noise

Teasing out the ways in which scientists navigate detection limits offers insight into the relationships between substances, instruments, and people that lab practitioners often foreground at the expense of other interactions. I draw on two cases in which scientists try to find a signal standing for early cancer markers using genomic sequencing techniques. Building on Walford’s (2017, 70) work, I argue that obtaining and stabilising data about how malignant cells come about poses ‘particular relational demands’.

Camilla is a PhD student working on the genomic features that drive clonal haematopoiesis (a pre-malignant condition) into acute myeloid leukaemia (a type of blood cancer). She tells me that she has been spending most of the last year ‘optimising a sequencing method that would enable us to detect really, really low frequency variance mutations so that we could see when they first appeared in the blood’. Because the molecular analyte Camilla is interested in (the ‘clones’) occurs at such a low frequency, she needs to learn to distinguish signals in the DNA ‘sequencing reads’, or outputs, from errors produced by the sequencing method she uses.

Because Camilla’s work concerns the detection of rare genetic mutations long before cancer lesions start to appear, the experimental system she uses to sequence blood samples needs to be ‘ultra-sensitive’: it must be able to detect very small DNA changes. She explained that because those molecular changes are rare and small, it is very difficult to obtain a meaningful signal from the volume of blood available in each biobank sample, and that she had struggled to ‘tell the difference between sequencing errors versus PCR errors versus mutations’.

Camilla taught me that validation processes are required not only to verify the outputs produced by a given experimental system: for her experiment, she also needs to verify and standardise the sequencing library (i.e., the DNA fragments that she intends to analyse). Optimising the sequencing library (or ‘library prep’) thus constitutes a first relational demand: understanding and reducing the variability of the outputs generated by the sequencing machine.

The need to optimise sequencing methods stems from well-known artefacts of the experimental system. These artefacts are introduced either while DNA is amplified through polymerase chain reaction (PCR), a step carried out by scientists during sample preparation before sequencing takes place, or as errors during genomic sequencing itself. Camilla told me, with some excitement, that she eventually got around this problem by using ‘barcodes’. These enabled her to track the DNA fragments, and therefore the signal, produced by the sequencing machine when typifying the mutations of interest.

Confronted with the detection limits of experimental systems, scientists like Camilla learn to see signals in different ways. Following Walford (2017), I argue that they do this by identifying the relational demands that ‘raw data’ makes on them. They must disentangle the myriad relationships arising from interactions between bodily substances, subjects, and experimental systems in order to ‘see’ cancer. Once identified, the confounding relations that appear in the outputs as noise are ‘substituted’ by meaningful relations that offer relevant information (see Walford 2017, 74). This is what Andrew, another group leader, described as the challenge of ‘teasing apart the tumour-specific signal’ in sequencing reads.

Andrew’s lab works on the epigenetic changes[4] that have been associated with the increased aggressiveness of some early prostate cancer lesions. He explained to me that, when trying to pick up the signal of rare genetic events, another way to overcome detection limits is through ‘signal enrichment’; that is, looking ‘for more markers in parallel’. He continued: ‘If we look for more rare events, that gives us a better chance of catching something, so then we look for an overall signal.’ As the specificity of the blood test improves through ‘signal enrichment’, scientists like Andrew argue that they can ascertain the presence of mutations that are due to cancer with more confidence. If noise is minimised, sequencing reads are more likely to capture analytes of interest in a sea of confounding background variations.

But Andrew’s solution is not straightforward. Mark, a postdoctoral researcher in Andrew’s lab who uses computational methods to analyse those epigenetic changes, raised the problem of the ‘detection limits’ he had encountered while sequencing samples. Like Camilla, Mark struggled to capture a robust signal indicating the presence of regions containing epigenetic changes in the DNA. In response to Mark’s presentation, Andrew asked group members: ‘How do we squeeze out more signal? Including more reads means including more noise too. Is the noise technical or biological?’

Here, raw data posed another relational demand to scientists: a trade-off that must be navigated. ‘Squeezing out more signal’ enables scientists to ascertain with more confidence whether outputs given by the machine can be attributed to the analyte of interest. Yet ‘squeezing out more signal’ also renders visible the other relationships that scientists want to discard: those described as ‘noise’.

Like Camilla, members of Andrew’s group knew that the variance in the observed signal could be due to the (epi)genome’s inherent heterogeneity, but it could also be due to artefacts generated during sequencing. This uncertainty exemplifies the difficulty of reliably ascertaining what constitutes ‘normal variation’, what is ‘pathological variation’, and what is instead an error introduced through sample preparation and sequencing when there is no ‘ground truth’ to compare against.

Building again on Walford’s argument about the scientific production of ‘raw data’ in the Amazonian forest, I understand cancer signals obtained by experimental systems to be ‘always a measurement plus something else’ (Walford 2017, 72). Hence, to stabilise something that is ‘inherently semiotically uncertain’ by learning what a signal should refer to, scientists must cut the ‘connections to all sorts of other entities’ (ibid.). This is a crucial ‘relational demand’ imposed by molecular research through which scientists seek to understand the origins of malignant lesions.

Curation practices are required to create meaning in a sea of data and to bring about a candidate biomarker (see Reardon 2017). As Barad has explained, ‘the separation of fact from artifact depends on the proper execution of experimental practices’, which itself requires a great deal of expertise; how else can one determine ‘what constitutes a good outcome’ (2007, 53)? In this context, learning to see cancer before it exists requires not only being aware of the potential sources of noise, but also learning how to purify and delineate meaningful results brought about by the sensing technologies themselves (Rheinberger 1997, 28). The process of cutting certain connections to foreground others (Walford 2017) becomes part of a process to validate a signal, which, importantly, needs to stand the test of peer review beyond the confines of the lab. In the next section, I describe the dynamics of one journal club session to elucidate what Rose and other research participants understood as ‘technical validation’.

Achieving reproducibility, managing uncertainty

After overcoming a detection limit and purifying a signal standing for a biological process of interest, scientists feel compelled to make sure that the experimental system used to detect the signal performs well in tests regardless of the scientist executing the experiment. This is what Rose defined as ‘technical validation’, and it concerns an important safeguard: candidate biomarkers must demonstrate reproducibility before they can be incorporated into clinical settings, because blood test results must be reliable regardless of the person deploying them. Attending the scientific journal clubs that research groups organised as part of their training was, I found, an insightful point of access. There, I could observe how members of the research programme assessed the reproducibility of facts generated through engagement with experimental systems.

Journal clubs took place during weekly research update meetings. Group members took turns reviewing a recently published paper of their choice from a top-tier journal, approaching the task in incisive detail. On one occasion, Brian, a postdoc in Rose’s lab who worked on biophysical models of tumour oxygenation using in silico experiments, presented the work of another research group that was developing an automated pipeline to quantify cancer cells as they spread from the original tumour site and to measure the efficacy of antibody drug targeting via molecular imaging. This pipeline, the paper’s authors claimed, was developed not only to overcome the inability of imaging technologies to detect micro-metastases in living tissue, but also to provide an automated algorithm for quantifying large-scale imaging data. Brian explained that the authors claimed to be able to detect single tumour cells in mice, something no other method could do at the time.

Going through the supplementary material published alongside the article, Brian described the aims and results of the paper. As soon as the presentation was over, Brian’s colleagues started firing questions, criticising the study’s many methodological issues. In that paper, the signal standing for cancer metastases was represented with a red pixel on the image, while the signal standing for the presence of the antibody agent was represented with a green pixel. The paper’s authors claimed that if those signals overlapped, it meant that the metastasis was being targeted by the antibody agent. But Rose’s group did not buy it. They wondered why no other group had been able to reproduce this article’s results.

‘What are the factors intervening in the distance between red and green signals? Does it depend on tumour vasculature or other aspects of the tumour microenvironment?’ Lisa, a PhD student working on tumour oxygenation in mice, asked.

Tatiana, the group’s lab manager, seconded this question, suggesting that the signal found at the single-cell level could be noise instead. ‘It’s hard to know if there are no controls,’ she said.

Following this intervention, Rose asked, ‘How can we know if it is cancer [and not noise] at the level of the single cell?’

Scientists in training in this research programme are expected to consider carefully the best ways to visualise data and to make claims about experimental results. Reviewing journal articles was a useful exercise because it allowed group members to better understand the academic publishing standards to which their own work would be held. It also allowed them to learn about the challenges that others might face when attempting to reproduce their results. Care is required to ensure that results can travel beyond the lab, even as their authors acknowledge the limitations of the circumstances in which these results were produced. Exercises like this fed scientists’ worries that their ‘codes would not work and the paper would need to be retracted’, a terrifying prospect for many of them, as Camilla once told me.

Demonstrating reproducibility depends on scientists’ ability to articulate and justify how others would be able to see the same features of interest that they are attempting to render visible. Put differently, they must not only learn to see but also visualise for others what cancer is before it becomes available to unmediated human perception. Learning to visualise demands disentangling what should be considered ‘noise’ or ‘artefacts’ from meaningful connections, as I have explained above.

How the scientists I met navigated the distinction between fact and artefact depended on the availability of what they considered ‘ground truths’ and ‘gold standards’. To paraphrase Tatiana’s words above, it is difficult to see the signal if there are no controls to create contrast. In the absence of samples from ‘healthy control groups’ against which the signals standing for molecular processes of interest can be compared, claims can only be made ‘in silico’, meaning they rely entirely on computerised mathematical models. Scientists know that in silico models are used for exploratory purposes only; ‘They tend to pose more questions and hypotheses than answers,’ Bob, a scientist modelling random changes due to sampling, told me once while going over his mesmerising graphs, which took the shape of ‘funnel plots’.

Finding a signal and being able to trace it back to the biological referent, then, is not enough to produce a candidate biomarker. Scientists need to make sure that what they are seeing is cancer and not an artefact produced by the experimental system. This is tricky in early detection as there are no alternative proofs external to the experimental system to rely on. This absence makes the step of ensuring ‘reproducibility’ difficult to achieve. Therefore, scientists know that they cannot give the impression of too much certainty when communicating results to others; instead, they need to be aware of possible sources of error.

While communicating with others about the ‘discovery’ of facts concerning the natural histories of cancer, scientists navigate a space of uncertainty, forging some connections and disregarding others. ‘Robust’ results from multiple experiments, ‘clean’ distinctions in histograms, and ‘beautiful data’ are compelling allies in the process of fact-making, and all can help results travel beyond the laboratory and into the clinic. This, of course, comes on top of good storytelling. As Orit Halpern puts it, ‘The shift toward “data-driven” research [has been] adjoined to a valorisation of visualisation as the benchmark of truth, and as a moral virtue [of the scientist]’ (2015, 148).

In the process of rendering molecular and metabolic patterns visible, uncertainty and negative results need to be acknowledged rather than obscured. Visuality, as an aesthetic category promoted by journals and publishers, can be misleading when discussing phenomena that sit at the edges of perception. As technologies of representation, journal papers and the results they contain aim to persuade others ‘about the structure of natural and social worlds’, but cannot be interpreted without reference to textual argumentation (Dumit 2004, 95–99). Scientists strive to give compelling accounts to allow for the emergence of facts describing the existence of novel biological realities. Still, they are simultaneously wary of erasing the social aspects of negotiation and the uncertainty that went into the making of those facts. At stake here is the confidence of the scientific community, and the general public, that scientific practices of early detection are indeed productive in halting tumour growth and alleviating human suffering.

Avoiding the impression of too much certainty is necessary for scientists because of the many accountabilities at play in pre-clinical research. In her work with behavioural geneticists modelling mental health conditions in mice, Nelson (2012) describes how the scientists she worked alongside adjusted the riskiness of the claims they made about their experimental system. They did so because scientists working with experimental systems must ‘respond to many different matters of concern, from the strength of the available evidence for the validity of the test, to retaining professional credibility in the face of sceptical funding bodies [who think, based on public criticism, that scientists might be] overstating the capabilities of their knowledge-making tools’ (Nelson 2012, 24).

In the final section, I discuss how scientists make sense of the accountabilities that tie them to others beyond the lab. I look at how a notion of responsibility is imparted to research students and practitioners to account for the limits imposed by experimental systems. Following Myers (2015), I show how engagement with experimental systems defines not only the emergent properties of the system in question and the biological referent rendered visible, but also the emergent characteristics of the scientists in training.

Early detection of cancer: Opportunity or threat?

This early detection research programme is characterised by a distinct aesthetic of articulation: researchers’ narratives revolve around a suite of novel tools and methods to uncover molecular and metabolic features associated with the development of cancerous tumours, many of which are referred to as potential candidate biomarkers.

The language of potentiality is relevant here. As Taussig, Hoeyer, and Helmreich have argued, ‘Potentiality becomes a term with which one articulates worries and not just hopeful prospects, and it becomes linked to political decision and deliberation rather than the immanent mechanisms of nature’ (2013, S8). Drawing on this idea, I understand early detection research practices as structured by a discourse that articulates two different meanings of potentiality: the inherent and deleterious (biological) potential of cancer growth, and the (human-made) potential of technologies able to halt that seemingly impending threat. I have shown that the intersection of these two movements creates an epistemic space fraught with uncertainty.

My focus has been an analysis of the practices through which these movements painstakingly elicit potential homological relations between signals detected and validated in pre-clinical models and human bodies. To operationalise candidate biomarkers in the diagnosis of cancer, scientists must learn to see markers of pre-cancerous lesions through a deluge of data, a process that first necessitates figuring out what ‘[one] is looking for’, as Lila, the research manager, put it. Scientists develop techniques that seek to be sensitive enough to detect the signals that correspond to the tiny biological features of interest but specific enough to discriminate tumour-specific variations from others, which are, ideally, minimised. It is through the process of ‘biological validation’ that signals then acquire meaning, defining analytes of relevance (a biomarker) that stand for emergent ontological entities (a cancer susceptibility).

More specifically, I have shown that learning what counts as ‘signals’ in scientists’ experimental systems is intimately entangled with the sensing technologies and analysis techniques used. Without appropriate techniques to parse out and enrich signals, thus enabling the scientist to see metabolic or molecular changes of interest, there is no ontological entity to pin down. Following Walford (2017), I have argued that in order to learn to see cancer, scientists must deal with a series of relational demands through which some relationships are forged and others are dismissed. Dealing with these relational demands enables scientists to adjudicate detected metabolic or molecular features as ‘real changes’ in participants’ bodies and distinguish them from those that, they believe, should be considered ‘noise’ or ‘artefacts’.

In the absence of ground truths to rely on, I have described practices of learning that enable scientists to see cancer before it exists as a process of mutual articulation between scientists and their working objects. As McDonald observed in her ethnography on the practice of learning anatomy in medical education classes in the UK, there is not ‘a world analytically of either subjects or objects anterior to the relations that produce them’ (2014, 141). Importantly, in this case the process of mutual articulation is redefined by the necessary entanglement with experimental systems that mediate the emergence of features of interest.

This process of mutual articulation shapes scientists’ understandings of their role as they learn to see cancer before it exists. Choosing an experimental design, we learn, has profound ethical implications for scientists, who need to think through the consequences that test results might have for patients’ lives. All group leaders considered it crucial to communicate the implications of translation to future scientists. As Andrew once explained to his group when discussing the challenge of overcoming detection limits, the molecular features of interest might already be present at low levels, so if an assay is incorporated into the clinic, ‘does it become a diagnostic opportunity or a threat?’

Here, Andrew’s sense of responsibility for what happens in the clinic feeds back into the strategies used to design experiments in the laboratory, pointing to the wider implications of carrying out biological and technical validation properly. Detection limits might be improved by choosing ‘clearer’ controls, so that differences in the epigenetic markers of cancer tissues are better contrasted. Yet, as Andrew intimates, the assay might be introduced into a clinical space in which control groups are not necessarily so ‘clear’: they might be made up of older men ‘who already have something wrong’ even if they do not have prostate cancer.

The absence of ground truths and homologous control groups makes scientists aware of the risk of overtreatment and overdiagnosis. How do they attempt to diminish the error rate of their biomarkers to prevent these? The challenge is that, in clinical use, the test will encounter confounding markers; ‘biological validation’ might not suffice to accomplish the ‘clinical validation’ of the test. In other words, research groups know that, to make the assays useful in the clinic, they need to make sure that the assay is indeed ‘capturing’ a candidate biomarker that can reliably identify, measure, or predict the aggressiveness of pre-cancerous lesions, and not something else, among the ‘real’ population of potential patients. If this is not the case, the test may generate more problems than solutions.

When researchers develop experimental systems to ‘discover’ early detection biomarkers, they not only see their work as vital to bridging the gap generated by what the oncological community considers ‘unmet clinical needs’, but also voice concerns about the risks of inadvertently promoting cancer ‘overdiagnosis’ in a context fraught with uncertainty. Here, the historical promise behind the injunction ‘do not delay’ in early detection is transformed. Scientists’ vision for the early detection of cancer is not backed up by ‘the assumptions about the natural history of cancer’, the ‘frustration over the lack of other effective prevention practices’, and the ‘responsibilisation’ of would-be patients (Aronowitz 2001, 359). Rather, researchers ask their audiences to agree on the need for a better understanding of the natural histories of cancer in the plural. Those histories are no longer assumed; scientists are only beginning to understand them in their complexity and variability.

This widespread sense of responsibility among researchers can be seen in the intellectual movement that seeks to square the belief that early detection and risk stratification can promote a ‘stage shift’ from late to early diagnosis of cancer with the ever-present possibility of promoting overdiagnosis and overtreatment if candidate biomarkers do not discriminate well when applied to the concrete populations of patients in the clinic. Without accurate diagnosis, people who do not have cancer (or those whose cancer is indolent and would never cause problems) might be put through unnecessary treatments and face related medical risks. Following Friese’s work alongside scientists learning mouse husbandry, the process of learning to see cancer before it exists is inherently associated with the need for scientists to ‘translate’ across experimental systems to the clinic in order to realise the potential of their research practices as ‘practices of care’ that could inform clinical decision making (Friese 2013).

All too aware that scientific facts travel beyond the lab (Dumit 2004), researchers focus on minimising error rates through the design of experimental systems and on thinking carefully about where, in specific diagnostic pathways, their novel assays could best be deployed. In this context, ‘sensitivity’ and ‘specificity’, two statistical measures of an assay’s error rates, point both to the performance of a specific assay and to the responsibility scientists feel for the clinical consequences of identifying candidate biomarkers. The mobilisation of sensitivity and specificity scores could thus be understood as one way through which the scientific community brings commitments and practices into alignment to prevent early detection technologies from becoming a threat.
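For readers less familiar with these two measures, their standard definitions can be stated briefly. Using notation introduced here only for illustration, let TP, FN, TN, and FP stand for the counts of true positives, false negatives, true negatives, and false positives obtained when an assay’s results are scored against a reference classification:

$$\text{sensitivity} = \frac{TP}{TP + FN}, \qquad \text{specificity} = \frac{TN}{TN + FP}$$

Sensitivity is thus the proportion of people with the condition whom the assay correctly flags, and specificity the proportion of people without it whom the assay correctly clears; false positives, which lower specificity, are what feed the concerns about overdiagnosis and overtreatment. Both ratios presuppose a reference classification against which results can be scored, which is precisely what is scarce in early detection research.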

Recognising the importance of developing experimental systems that offer accurate and specific results, I find myself agreeing with Janet Carsten (2019), who, in her ethnography of the social life of blood in Malaysia, sketches the double process that structures laboratory technologists’ work. After the practice of ‘detachment’, through which ‘the personal and moral attributes of blood’ are disentangled from the sample so that it can be processed, a second practice of ‘re-humanisation’ occurs, imbuing seemingly tedious laboratory tasks with interest and ethical significance (Carsten 2019, 155–156). In my ethnographic context, scientists’ reflections on how experimental systems can be applied in early detection clinical settings, and on how the potential errors of those systems can best be minimised, represent one of the ways in which scientists strengthen the link between two seemingly independent domains. Through the crafting of a sense of responsibility, the laboratory as a site of experimentation is no longer secluded from the clinical dynamics it seeks to inform.

Acknowledgements

I would like to thank the scientists who engaged with this social anthropologist intruding on their working lives and academic spaces, as well as the group leaders who allowed me into their laboratories and patiently answered my many questions. Earlier drafts of this article benefited from insightful feedback from Maryon McDonald and Rosalie Allain. Special thanks to two anonymous reviewers at Medicine Anthropology Theory and the special issue editors Alice Street and Ann Kelly for their constructive comments.

About the author

Ignacia Arteaga Pérez is a research associate and an affiliated lecturer at the Department of Social Anthropology, University of Cambridge. She is also a research fellow at Robinson College, Cambridge. She obtained a PhD in anthropology from University College London in 2018. Ignacia’s current research, funded by the Philomathia Foundation, concerns the practices of multiple stakeholders involved in the fields of cancer detection in the UK.

Footnotes

1 Rose explained the photoacoustic imaging of tumour oxygenation to me in the following way:

Pulse light (in nanoseconds) is absorbed by haemoglobin in red cells. The absorption of light generates an acoustic wave which propagates out to the tissue surface. At the tissue surface we place an ultrasound transducer which collects the acoustic wave. Based on the time that the acoustic wave needs to travel, we can reconstruct where the optical absorption event took place. Hence, we create a picture of the map where all the absorption interactions are happening in the tissue.

2 As I later explain, technical validation ensures that the experimental system used to detect the signal performs well in tests regardless of the scientist executing the experiment.

3 Lisa and other scientists working with mice are acutely aware of the importance of minimising the use of animal models and animal suffering. Following the 3R guidelines for scientific research (see https://nc3rs.org.uk/the-3rs), they rely on statistical analysis to ‘power their results’, which then dictates the minimum number of mice needed to obtain statistically significant results. Moreover, they would challenge the quality of peer-reviewed publications based on these criteria, as I will shortly explain using the example of one of the ‘journal clubs’ I attended.

4 The scientists I met understood ‘epigenomic changes’ as external changes that, although they modify gene expression, do not alter the DNA sequence. An example of one such change that Andrew’s group works with is ‘methylation patterns’.

References

Armstrong, David. 2012. ‘Screening: Mapping Medicine’s Temporal Spaces’. Sociology of Health & Illness 34 (2): 177–193. https://doi.org/10.1111/j.1467-9566.2011.01438.x.

Aronowitz, Robert. 2001. ‘Do Not Delay: Breast Cancer and Time, 1900–1970’. Milbank Quarterly 79 (3): 355–386. https://doi.org/10.1111/1468-0009.00212.

Aronowitz, Robert. 2009. ‘The Converged Experience of Risk and Disease’. Milbank Quarterly 87 (2): 417–422. https://doi.org/10.1111/j.1468-0009.2009.00563.x.

Arteaga, Ignacia. 2021. ‘Game-Changing? When Biomarker Discovery and Patient Work Meet’. Medical Anthropology 14: 1–13. https://doi.org/10.1080/01459740.2020.1860960.

Bachelard, Gaston. 1953. Le matérialisme rationnel. Paris: Presses Universitaires de France.

Barad, Karen. 2007. Meeting the Universe Halfway: Quantum Physics and the Entanglement of Matter and Meaning. Durham, NC: Duke University Press.

Bell, Kirsten. 2013. ‘Biomarkers, the Molecular Gaze, and the Transformation of Cancer Survivorship’. Biosocieties 8 (2): 124–143. https://doi.org/10.1057/biosoc.2013.6.

Blanchard, Anne, and Roger Strand. 2017. Cancer Biomarkers: Ethics, Economics and Society. Kokstad: Megaloceros Press.

Day, Sophie, Charles Coombes, Louise McGrath‐Lone, Claudia Schoenborn, and Helen Ward. 2017. ‘Stratified, Precision or Personalised Medicine? Cancer Services in the “Real World” of a London Hospital’. Sociology of Health & Illness 39 (1): 143–158. https://doi.org/10.1111/1467-9566.12457.

Dumit, Joseph. 2004. Picturing Personhood: Brain Scans and Biomedical Identity. Princeton, NJ: Princeton University Press.

Dumit, Joseph. 2012. Drugs for Life: How Pharmaceutical Companies Define Our Health. Durham, NC: Duke University Press.

Friese, Carrie. 2013. ‘Realizing Potential in Translational Medicine: The Uncanny Emergence of Care as Science’. Current Anthropology 54 (S7): S129–S138. https://doi.org/10.1086/670805.

Gibbon, Sahra. 2007. Breast Cancer Genes and the Gendering of Knowledge. London: Palgrave Macmillan.

Gillespie, Chris. 2012. ‘The Experience of Risk as “Measured Vulnerability”: Health Screening and Lay Uses of Numerical Risk’. Sociology of Health & Illness 34 (2): 194–207. https://doi.org/10.1111/j.1467-9566.2011.01381.x.

Hacking, Ian. 1983. Representing and Intervening: Introductory Topics in the Philosophy of Natural Science. Cambridge: Cambridge University Press.

Halpern, Orit. 2015. Beautiful Data: A History of Vision and Reason Since 1945. Durham, NC: Duke University Press.

Kerr, Anne, Julia Swallow, Choon-Key Chekar, and Sarah Cunningham-Burley. 2019. ‘Genomic Research and the Cancer Clinic: Uncertainty and Expectations in Professional Accounts’. New Genetics and Society 38 (2): 222–239. https://doi.org/10.1080/14636778.2019.1586525.

Latour, Bruno, and Steve Woolgar. 1979. Laboratory Life: The Social Construction of Scientific Facts. London: Sage Publications.

Latour, Bruno. 2004. ‘How to Talk about the Body? The Normative Dimension of Science Studies’. Body & Society 10 (2–3): 205–229. https://doi.org/10.1177/1357034X04042943.

Löwy, Ilana. 1992. ‘The Strength of Loose Concepts: Boundary Concepts, Federative Experimental Strategies and Disciplinary Growth. The Case of Immunology’. History of Science 30: 373–396. https://doi.org/10.1177/007327539203000402.

Löwy, Ilana. 2010. Preventive Strikes: Women, Precancer, and Prophylactic Surgery. Baltimore, MD: Johns Hopkins University Press.

McDonald, Maryon. 2014. ‘Bodies and Cadavers’. In Objects and Materials: A Routledge Companion, edited by Penny Harvey, Eleanor Conlin Casella, Gillian Evans, Hannah Knox, Christine McLean, Elizabeth B. Silva, Nicholas Thoburn, et al., 128–143. London: Routledge.

Myers, Natasha. 2015. Rendering Life Molecular: Models, Modelers, and Excitable Matter. Durham, NC: Duke University Press.

Nelson, Nicole. 2012. ‘Modelling Mouse, Human, and Discipline: Epistemic Scaffolds in Animal Behaviour Genetics’. Social Studies of Science 43 (1): 3–29. https://doi.org/10.1177/0306312712463815.

Nelson, Nicole, Peter Keating, and Alberto Cambrosio. 2013. ‘On Being “Actionable”: Clinical Sequencing and the Emerging Contours of a Regime of Genomic Medicine in Oncology’. New Genetics and Society 32 (4): 405–428. https://doi.org/10.1080/14636778.2013.852010.

Ong, Aihwa. 2016. Fungible Life: Experiment in the Asian City of Life. Durham, NC: Duke University Press.

Rader, Karen. 2004. Making Mice: Standardizing Animals for American Biomedical Research, 1900–1955. Princeton, NJ: Princeton University Press.

Reardon, Jenny. 2017. The Postgenomic Condition: Ethics, Justice, and Knowledge after the Genome. Chicago, IL: University of Chicago Press.

Rheinberger, Hans-Jörg. 1997. Toward a History of Epistemic Things: Synthesizing Proteins in the Test Tube. Stanford, CA: Stanford University Press.

Rose, Nikolas. 2007. The Politics of Life Itself: Biomedicine, Power, and Subjectivity in the Twenty-First Century. Princeton, NJ: Princeton University Press.

Ross, Emily, Julia Swallow, Anne Kerr, and Sarah Cunningham-Burley. 2018. ‘Online Accounts of Gene Expression Profiling in Early-Stage Breast Cancer: Interpreting Genomic Testing for Chemotherapy Decision Making’. Health Expectations 22 (1): 74–82. https://doi.org/10.1111/hex.12832.

Samimian-Darash, Limor, and Paul Rabinow (eds). 2015. Modes of Uncertainty: Anthropological Cases. Chicago, IL: University of Chicago Press.

Shapin, Steven, and Simon Schaffer. 2011. Leviathan and the Air-Pump: Hobbes, Boyle, and the Experimental Life. Princeton, NJ: Princeton University Press.

Svendsen, Mette, and Lene Koch. 2013. ‘Potentializing the Research Piglet in Experimental Neonatal Research’. Current Anthropology 54 (S7): S118–S128. https://doi.org/10.1086/671060.

Taussig, Karen-Sue, Klaus Hoeyer, and Stefan Helmreich. 2013. ‘The Anthropology of Potentiality in Biomedicine’. Current Anthropology 54 (S7): S3–S14. https://doi.org/10.1086/671401.

Tutton, Richard. 2014. Genomics and the Reimagining of Personalized Medicine. London: Ashgate.

Whyte, Susan, Michael Whyte, and David Kyaddondo. 2018. ‘Technologies of Inquiry: HIV Tests and Divination’. HAU: Journal of Ethnographic Theory 8 (1–2): 97–108. https://doi.org/10.1086/698359.